Roblox Responds to Louisiana AG Lawsuit

SAN MATEO, Calif. – As a matter of policy, Roblox does not comment on pending litigation. However, the company would like to address erroneous claims and misconceptions about our platform, our commitment to safety, and our overall safety track record.

Every day, tens of millions of people around the world use Roblox to learn STEM skills, play, and imagine, and they have a safe experience on our platform. Any assertion that Roblox would intentionally put our users at risk of exploitation is simply untrue. No system is perfect, and bad actors adapt to evade detection, including through efforts to take users to other platforms, where safety standards and moderation practices may differ. We continuously work to block those efforts and to enhance our moderation approaches to promote a safe and enjoyable environment for all users.

At Roblox, we are constantly innovating safety tools and launching new safeguards. In the past year, Roblox has introduced over 40 new features to protect its youngest users and empower parents and caregivers with greater control, including updated parental controls, stricter defaults for users under 13, and new content maturity labels.

Roblox has taken an industry-leading stance on age-based communication and recently introduced new age estimation technology to help confirm a user’s age through a simple, quick selfie-video. This technology allows us to provide a safer and more tailored online experience for our users by accurately confirming their age and unlocking appropriate features. Read more in this blog post.

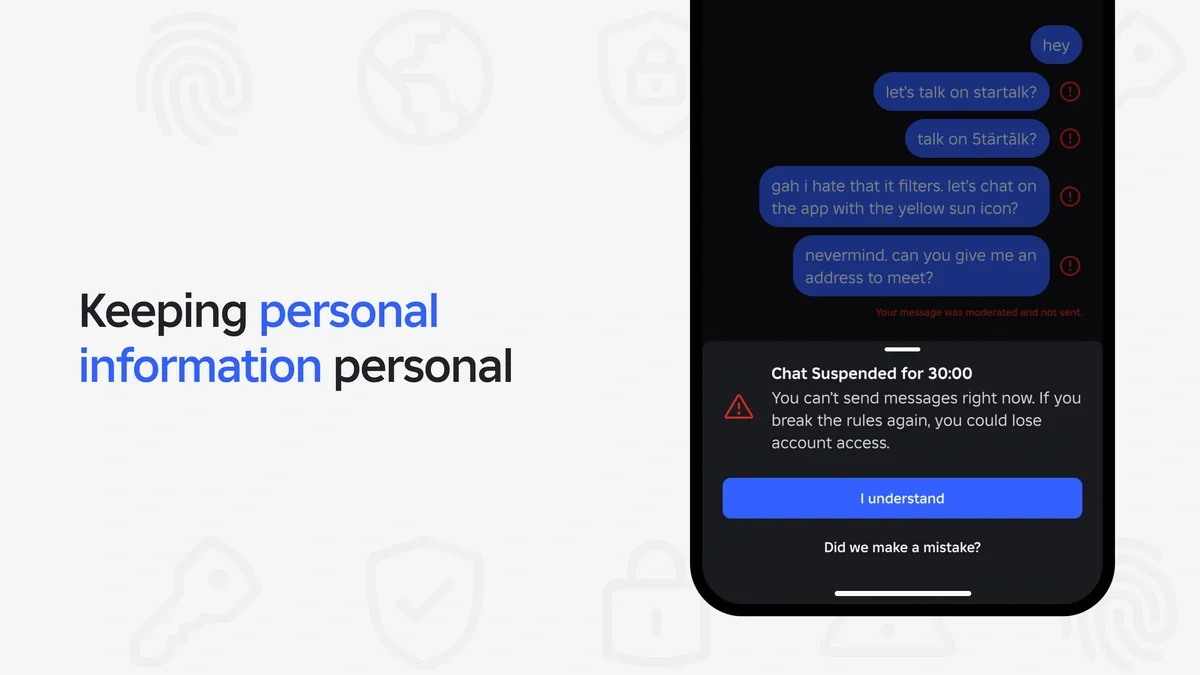

Roblox started as a platform for children, and while 64% of the user base is now 13 or over, the platform has rigorous safety features built in, and its policies are purposely stricter than those found on social networks and other user-generated content platforms. This includes rigorous text chat filters that block inappropriate language and attempts to direct users under 13 off the platform or solicit personal information. Furthermore, users under 13 cannot directly message others outside of games or experiences unless parental controls are adjusted, and direct image sharing between users is prohibited.

We dedicate substantial resources to help detect and prevent inappropriate content and behavior. Our Community Standards set clear expectations for how to behave on Roblox and define Restricted Experiences. We combine advanced AI models with a large, expertly trained team of thousands of members dedicated to protecting our users and monitoring 24/7 for inappropriate content.

For inappropriate content: Our Trust & Safety team takes swift action (typically within minutes) to address violative material. Violative content and accounts are removed through AI scans, user flags, and proactive monitoring, with a dedicated team focused on enforcement and swift removal. For instance, Diddy experiences violate our Real World Sensitive Events policy, and we have a dedicated team working on scrubbing that content from the platform.

In August we released our latest open-source model, Roblox Sentinel, an advanced AI-powered system designed to help detect child endangerment interactions early for faster intervention. Human experts continue to be essential for investigating and intervening in the cases Sentinel detects. Read more in this blog post.

We know safety is critically important to families, and we strive to empower our community of parents and caregivers to help ensure a safe online experience for their children. This includes a suite of easy-to-use parental controls that give parents more control over, and clarity about, what their kids and teens are doing on Roblox. Parents can:

- Block or limit specific experiences based on content maturity labels

- Block or report people on their child’s friends list

- See which experiences their child is spending the most time in

- Set daily screen time and spending limits

Families and caregivers can find resources detailing our safety measures here. (See blog posts from November 2024 and April 2025, and new safety tools for teens.)

Roblox collaborates with law enforcement, government agencies, mental health organizations, and parental advocacy groups to create resources for parents and to keep users safe on the platform. Through vigorous global outreach, we’ve developed deep and lasting relationships with law enforcement at the international, federal, state, and local levels. For example, we maintain direct communication channels with organizations such as the FBI and the National Center for Missing and Exploited Children (NCMEC) for immediate escalation of serious threats that we identify.

We proactively report potentially harmful content to NCMEC, which is the designated reporting entity for the public and electronic service providers regarding instances of suspected child sexual exploitation. In 2024, we submitted 24,522 reports to NCMEC (0.12% of the 20.3 million total reports submitted to NCMEC).

In addition, Roblox maintains relationships with multiple child safety organizations. Roblox has partnerships with over 20 leading global organizations that focus on child safety and internet safety, and when developing new products or policies, we work with these and other experts to get their input.

Safety is one of the most important challenges facing the online industry today. We strongly believe the industry needs to work together to move the needle on safety, which is why we actively collaborate cross-industry to provide others with better tools for detecting grooming in chats.

- We’ve been an active member of the Tech Coalition, a community of tech companies working together to protect children online, since 2018. We joined Lantern as a founding member in 2023. This first-of-its-kind cross-platform signal-sharing program enables participating companies to share information and take action on bad actors who move from platform to platform.

- We also joined the Robust Open Online Safety Tools (ROOST) initiative as a founding partner to share new safety technology that can help advance the industry as a whole, beginning with our voice-safety classifier.

We aim to create one of the safest online environments for users. This goal is not only core to our founding values but, contrary to certain assertions, one we believe is critical to our long-term vision and success. We understand there is always more work to be done, and we are committed to making Roblox a safe and positive environment for all users.

Contacts:

Roblox Communications