How Becoming a Founding Partner of a New Open-Source Initiative Is Key to Our Approach to Online Safety

- AI has become foundational to how we approach safety at Roblox, and we apply our models to text and voice communications, images, and 3D models and meshes.
- Promoting user safety, especially for our youngest users, has always been our top priority, and that’s why we continue to invest in and improve our safety systems.
- Contributing to the open-source community is important to us, and we’re expanding our leadership position in safety technology by becoming a founding partner of ROOST, a new nonprofit dedicated to tackling important areas in digital safety by promoting open-source safety tools.
- We’re also open-sourcing a new version of our voice safety classifier model (a 94-million-parameter model processing up to 400,000 hours of active speech daily on our platform), and we plan to release additional open-source safety AI models in the future.

Promoting safety and civility has been foundational to Roblox since our inception nearly two decades ago. Designing systems to promote safety for everyone is a major effort, especially at our global scale and with all the different types of content we support. That’s why we invest heavily in infrastructure, AI, and humans. With hundreds of models in production, nearly every interaction on Roblox is powered in some way by AI.

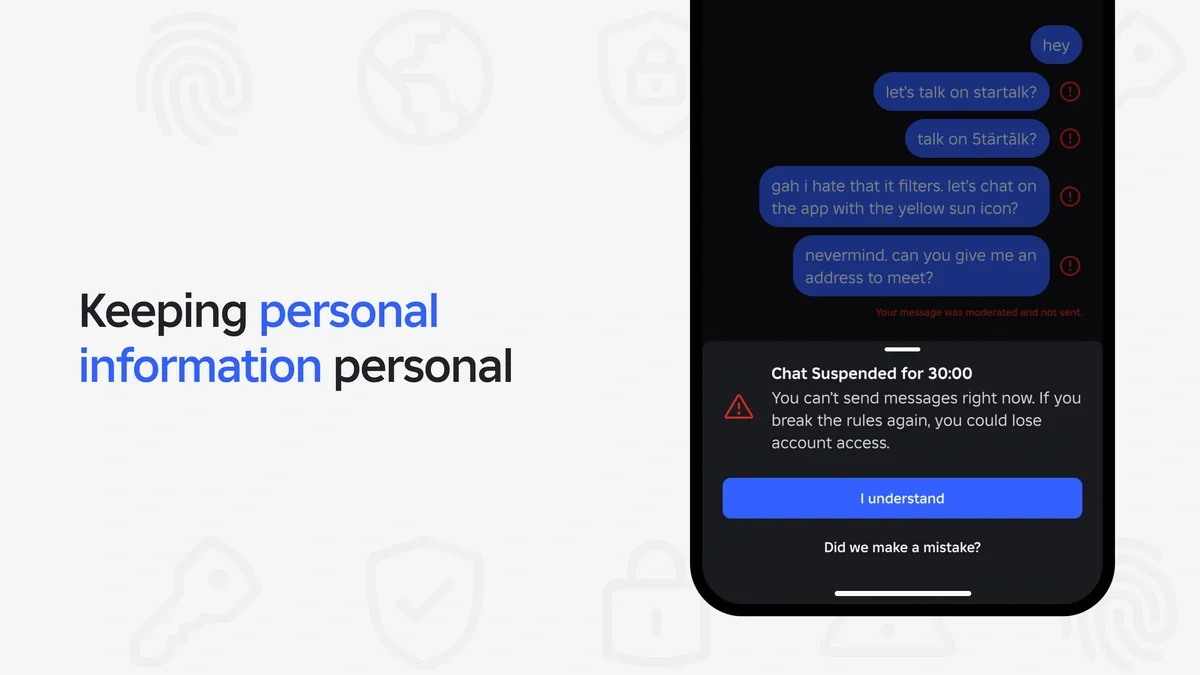

In the fourth quarter of 2024, our users uploaded more than 300 billion pieces of content spanning video, audio, text, voice chat, avatars, and 3D experiences. Only 0.01% of that content was detected as violating our policies, and most of the violating content was proactively moderated before users saw it. We’ve also developed our own high-performance AI models to keep our full range of content and communication modalities safe. These models process more than 4 billion text messages a day with only milliseconds of latency, along with millions of hours of voice and millions of content items. We’re committed to detecting policy-violating content and making moderation decisions at scale.
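For a sense of scale, the percentage and daily volume above translate into approximate absolute figures as follows. This is back-of-the-envelope arithmetic on the rounded numbers in this post, not separately reported data:

$$
\begin{aligned}
300 \times 10^{9} \times 0.01\% &= 300 \times 10^{9} \times 10^{-4} = 3 \times 10^{7} \ \text{items (about 30 million)} \\
\frac{4 \times 10^{9}\ \text{messages/day}}{86{,}400\ \text{s/day}} &\approx 46{,}300\ \text{messages/s}
\end{aligned}
$$

Both results are approximate, and because the message volume is given as "more than" 4 billion, the per-second rate is a lower bound.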

Safety is something that transcends Roblox. While we’re able to help protect our users when they’re on our platform, we have limited power to do so when they’re not on Roblox. Indeed, internet companies all over the world face these challenges when their users move from platform to platform. So over the years, we’ve taken a leadership role in promoting online safety and civility practices by joining organizations like the Family Online Safety Institute and the Tech Coalition.

Now, we’re proud to expand our leadership position in online safety by becoming a founding partner of ROOST (Robust Open Online Safety Tools), along with Google, OpenAI, Discord, and others. This new nonprofit is dedicated to addressing important areas in digital safety, especially online child safety, by building scalable, interoperable, and resilient safety tools suited for the AI era.

ROOST will develop, maintain, and distribute free, open-source safety resources that public and private organizations of all sizes, many of which lack access to basic safety technology, can use to strengthen the systems that keep their users safe. This will let them focus more of their energy on growing their businesses.

We’re excited to help bolster safety across the internet, and to that end, we’ll also be a co-chair of ROOST’s technology advisory committee. This will give us the opportunity to share what we’ve learned, and to inform and support the organization’s work and technical strategy.

Becoming a founding partner of ROOST lets us draw on the machine learning expertise of the ROOST community to advance the safety techniques we use and share. This is exciting because ROOST is working on three core areas of online safety that are mission-critical for Roblox and other online platforms. These areas are:

- Improving child safety, including developing stronger child sexual abuse material (CSAM) classifiers
- Building better safety infrastructure, such as review consoles and heuristic engines, and improving how training examples are collected, curated, statistically sampled, and labeled, as well as how human moderators are trained on these use cases
- Creating large language model (LLM)-powered content safeguards that leverage AI to revise and train moderators to enforce policies

“Having a global leader in online safety like Roblox join ROOST as a founding partner is a huge opportunity for us,” said ROOST Vice Chair of the Board Eli Sugarman. “With its demonstrated commitment to open source safety, Roblox is in a great position to share its innovative approach to help protect the entire online community.”

This is a big moment for the entire online community, and our work on open-source tools is a major element of that. We think AI is a technology that should be built on transparency and openness, and we’re committed to being a strong partner in the open-source AI community.

Contributing Technology to the Open-Source Community

Being a founding partner of ROOST goes hand in hand with our approach to contributing technology to the open-source community. This is a journey we began last year when we open-sourced our voice safety classifier model, which can detect policy violations more accurately than human moderators at a scale of millions of minutes of voice activity a day. Since then, it’s been downloaded nearly 22,000 times.

We now have an updated version of that model in production that handles seven new languages (Spanish, German, French, Portuguese, Italian, Korean, and Japanese) and applies new techniques that make the model more effective. These include a curriculum training architecture for fine-tuning on human labels, expanded abuse heads, and more efficient feature extraction and time reduction layers. We plan to open-source the new model version by the end of the first quarter of 2025. Looking ahead, we also plan to open-source classification models for other modalities later this year.
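The post names time reduction layers without describing how Roblox implements them. As a generic illustration only, one common form of time reduction in speech models concatenates each group of consecutive acoustic feature frames into a single wider frame, so later layers process fewer time steps. The sketch below assumes frames are plain lists of floats and zero-pads the final group; the function name `time_reduction` and the padding choice are hypothetical, not taken from Roblox’s model.

```python
from typing import List

Frame = List[float]


def time_reduction(frames: List[Frame], factor: int) -> List[Frame]:
    """Hypothetical helper: generic frame-stacking time reduction.

    Not Roblox's code. A sequence of T frames of dimension D becomes
    ceil(T / factor) frames of dimension D * factor.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    if not frames:
        return []
    dim = len(frames[0])
    reduced: List[Frame] = []
    for start in range(0, len(frames), factor):
        # Slicing creates a new list, so padding below never mutates the input.
        group = frames[start:start + factor]
        # Zero-pad the final group so every output frame has the same width.
        while len(group) < factor:
            group.append([0.0] * dim)
        # Concatenate the group's feature vectors along the feature axis.
        reduced.append([value for frame in group for value in frame])
    return reduced


if __name__ == "__main__":
    # 5 frames with 2 features each -> 3 frames with 4 features each.
    frames = [[float(t), float(t) + 0.5] for t in range(5)]
    reduced = time_reduction(frames, factor=2)
    print(len(reduced), len(reduced[0]))  # prints "3 4"
```

Shorter sequences reduce the compute and memory needed by downstream layers, which matters at the voice volumes quoted above. Other models achieve the same effect with strided convolutions or pooling; this post does not say which approach Roblox uses.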

Open-sourcing our tools is part of who we are, in some of our safety work and in creation as well. Last year, for example, we unveiled our 3D Foundational Model, which will help creators integrate many types of auto-generation tools into their experiences.

We’re excited to be a founding partner of the ROOST community, and we aim to collaborate with other leaders in the field and contribute all we can to making the internet a safer place.