Tech Talks Episode 28: Update On Our Safety Initiatives

00:02 Introduction

“Welcome, I'm David Baszucki, founder and CEO at Roblox, and you're listening to Tech Talks, where we talk about everything that's happening at Roblox. We have a fast follow today…Hot on the release of facial age estimation, Trusted Connections, and a slew of other things…we’re going to dive into some new things we're releasing.”

04:13 Why We Renamed Friends to Connections

David Baszucki: “We did something that was arguably controversial, because we're thinking about the future… A feature that was really in the very first spec of Roblox, almost 20 years ago, was a feature called Friends. We renamed that to Connections and Trusted Connections.”

06:28 Sensitive Issues

Eliza Jacobs: “This launch was really about giving parents more visibility and more choice into the things that our youngest users are engaging with on the platform. And one of the things we know about our community is we have a global UGC community of creators that, as you said, are just explosively creative. What that means is that, for super sensitive topics, families might have very different opinions on them, or might want to approach them with their kids in very sensitive ways… So we're giving parents the choice to either enable access to games that are primarily themed on those topics, or not.”

12:48 Vigilantes

Matt Kaufman: “I want to start off by saying that both inside of Roblox, and there are lots of people outside of Roblox who really have the best intentions and the welfare of our community at heart. We all want to keep Roblox safe, and we all prioritize the safety of kids, in particular, above everything else… And we have members of the community in these vigilante groups that started off in a really good place.”

21:11 Question 1: Why Didn’t We Act Faster on Vigilante Activity?

Matt Kaufman: “We have systems in place to watch all of this activity. And…we saw some of this vigilante behavior, and we decided to take a slow approach to it and understand what was happening before we decided to act… We were also thinking carefully about our policies, and thinking carefully about making sure that we had collected the evidence internally of the policy violations, and we really understood what was happening before we acted.”

23:37 Question 2: Are We Doing Enough as a Platform?

Eliza Jacobs: “I think there's always more we can be doing, and we will continue to evolve our policies and our products to respond to new threat vectors and new forms of abuse. We're working now on a policy update around games that have intimate spaces in them, so that's things like bedrooms and bathrooms… And you'll soon see an update from us on that front. We're also working on a community safety council.”

25:26 Open Sourcing Our Safety Technology

Matt Kaufman: “We feel like this is an opportunity for Roblox to really help with the entire industry, not just what's happening on Roblox. We're taking a much more proactive stance in open sourcing key technology that we have on the platform. We open sourced our voice moderation model last year, and just recently, we open sourced a new model that helps with the detection of grooming and other types of harms that evolve over a long period of time.”

26:49 Question 3: What About These Specific Games?

Eliza Jacobs: “One thing that's really challenging on this global, immersive, interactive platform is that we have content created by developers and creators, and content created by the users in that game. And one thing we really try to do is be fair to our developers, and to not moderate them for the behavior of their users, right? That doesn't seem fair at all, then we're taking a game down because users were in it behaving badly. That being said…we're investing in what we're calling bad scene detection, so that we can moderate in the moment those servers and take them down if users are behaving badly.”

30:47 How to Report

Eliza Jacobs: “Users can flag anything that they see that is violative. They can flag usernames, they can flag games, they can flag all kinds of things. The most important thing is that they provide as much information as possible in the report so that our teams can investigate it. That is really critical, right? Because again, we want to be fair to our community and make sure that we've observed the bad behavior, that we have evidence of it. Then we can tell folks when they violated the policies, and also that there's always an avenue to appeal. So if we've gotten it wrong, they can appeal, and we can, again, take a look at that evidence.”

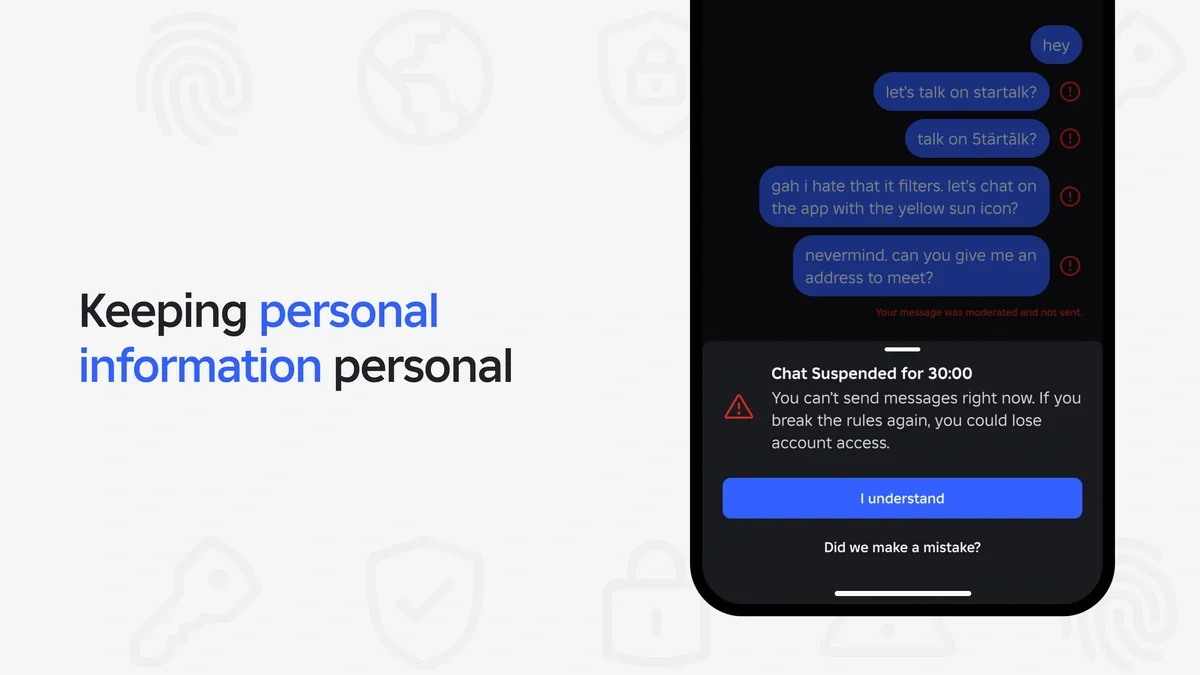

36:34 Open Sourcing Voice Safety and Civility

Matt Kaufman: “What we've seen online is a lot of people who come to Roblox, they don't know the rules. And for most people, it just takes a little bit of nudging to course-correct and get them on a path which is more civil. We had to figure out a way of doing that in real time… We built a model that goes straight from the actual voice communication, what people are saying, and is able to measure whether that adheres to our policies or not. And we were so excited about the results from that model that we open-sourced it.”

40:01 Open Sourcing Roblox Sentinel

Matt Kaufman: “Roblox Sentinel is a model that we open sourced a few weeks ago. And what that model is for is looking for long-term behavior patterns which result in violations of our policies… Really casual conversations, like, 'Hey, how's your day? You want to go play this game?' Slowly over time, may evolve into something that's much more violative. It may evolve into moving that user to a different platform. And those things are hard to detect in the moment.”