Introducing Roblox Cube: Our Core Generative AI System for 3D and 4D

- We are releasing our Cube 3D foundation model for generative AI.

- We are also open-sourcing a version of the Cube 3D foundation model.

- The beta version of Cube 3D mesh generation—in Roblox Studio and as an in-experience Lua API—will be available this week.

Last fall, we announced an ambitious project to build an open-source 3D foundation model to create 3D objects and scenes on Roblox. This week, we are open-sourcing the first release of this model on both GitHub and HuggingFace, making it available to anyone on or off the Roblox platform. We’ve named this model Cube 3D. We are also launching the first of its capabilities, with the beta launch of our mesh generation API. Cube will underpin many of the AI tools we’ll develop in the years to come, including highly complex scene-generation tools. It will ultimately be a multimodal model, trained on text, images, video, and other types of input, and it will integrate with our existing AI creation tools.

Cube 3D generates 3D models and environments directly from text and, in the future, image inputs. Today, state-of-the-art 3D generation uses images and a reconstruction approach to build 3D objects. This is a good option when there isn’t sufficient 3D training data. However, thanks to the nature of our platform, we train on native 3D data. The generated object is fully compatible with game engines today and can be extended to make objects functional.

The difference here is similar to a racetrack movie set. On TV, you might see what looks like a fully functional racetrack, with stands, garages, and a victory lane. But if you were to walk around on that set, you’d quickly realize that the structures were actually flat. Building a truly immersive 3D world requires complete, functional structures, with garages you can drive into, stands you can sit in, and a victory lane with a functional podium.

To achieve this, we’ve taken inspiration from state-of-the-art models trained on text tokens (or sets of characters) so they can predict the next token to form a sentence. Our innovation builds on the same core idea. We’ve built the ability to tokenize 3D objects and understand shapes as tokens, and we’ve trained Cube 3D to predict the next shape token to build a complete 3D object. When we extend this to full scene generation, Cube 3D first predicts the layout and then recursively predicts the shapes that complete that layout.
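The next-token idea above can be sketched as a small sampling loop. This is a toy illustration, not Cube 3D's implementation: the token names (`base`, `body`, `wheel`), the `next_token_distribution` function, and its probabilities are all hypothetical stand-ins for a trained model and its learned shape vocabulary.

```python
import random

END = "<end>"  # hypothetical end-of-shape marker


def next_token_distribution(prefix):
    """Stand-in for a trained model: maps the full prefix to {token: probability}.

    A real model would compute this with a neural network; these rules are
    invented purely to show that each step depends on everything generated so far.
    """
    if not prefix:
        return {"base": 1.0}
    if prefix.count("wheel") >= 2:
        return {END: 1.0}
    if prefix[-1] == "base":
        return {"body": 1.0}
    return {"wheel": 0.8, END: 0.2}


def generate_shape_tokens(max_tokens=16, seed=0):
    """Autoregressive loop: sample one token, append it, and repeat until END."""
    rng = random.Random(seed)
    prefix = []
    for _ in range(max_tokens):
        distribution = next_token_distribution(prefix)
        tokens = list(distribution)
        weights = [distribution[token] for token in tokens]
        next_token = rng.choices(tokens, weights=weights, k=1)[0]
        if next_token == END:
            break
        prefix.append(next_token)
    return prefix


if __name__ == "__main__":
    print(generate_shape_tokens(seed=0))
```

The loop has the same structure as text generation: the only thing that changes is what a token stands for.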

Anyone can fine-tune, develop plug-ins for, or train Cube 3D on their own data to suit their needs. We believe that AI tools should be built on openness and transparency, which is why we are a committed partner in the open-source AI community. We released one of our AI safety models because we feel strongly that sharing advancements in AI safety helps the entire industry accelerate innovation and technical advancements. For this reason, we also helped found ROOST, a new nonprofit dedicated to tackling important areas in digital safety with open-source safety tools. In open-sourcing Cube 3D, our goal is to enable researchers, developers, and the broader AI community to learn, augment, and advance 3D generation industry-wide.

Cube 3D for Creation

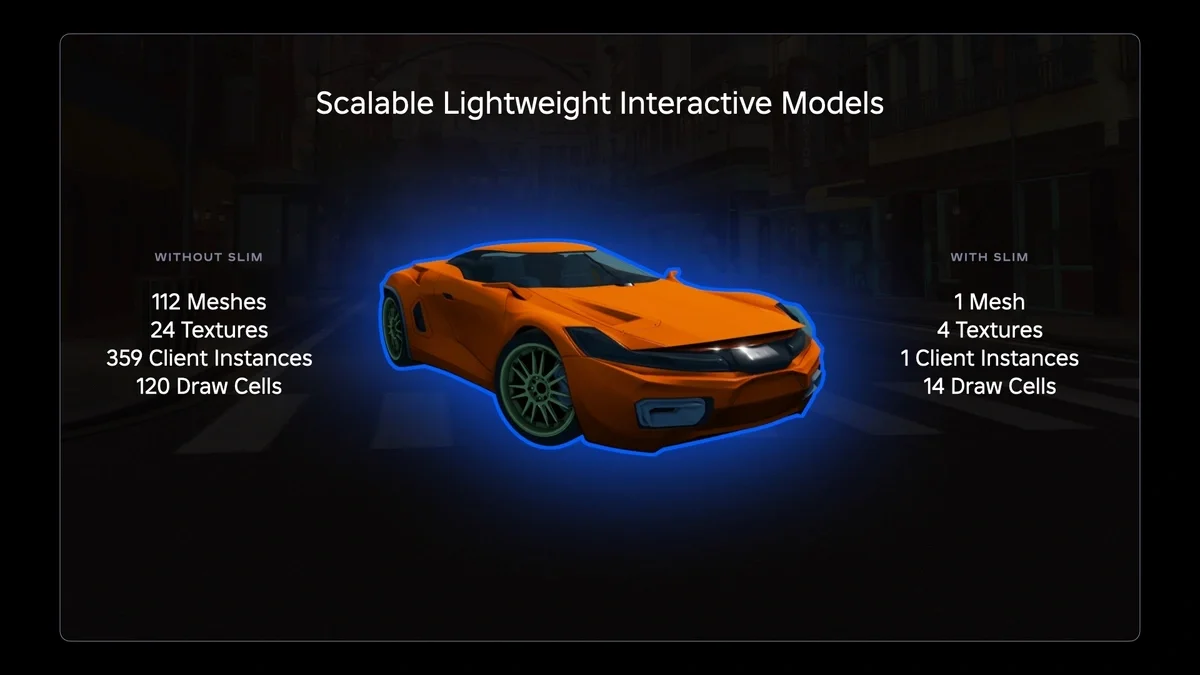

We’ve spoken previously about how AI can accelerate the creation of 3D assets, accessories, and experiences. Ultimately AI will enable even more immersive and personalized play and connections. We invest in infrastructure to support AI at every stage of the creation cycle—for both the developers of these experiences and the users who spend time in them. We envision a future where developers will give their users new ways to create by enabling AI in their experiences. This puts the power of AI in the hands of more than 85 million daily active users as part of their gameplay.

In the past year, we’ve introduced several new features through our AI-powered Assistant within Roblox Studio to provide developers with the tools and capabilities they need to create and eliminate hours of manual work. With Cube, we intend to make 3D creation more efficient. With 3D mesh generation, developers can quickly explore new creative directions and increase their productivity by rapidly deciding which ones to pursue.

Imagine building a racetrack game. Today, you could use the Mesh Generation API within Assistant by typing in a quick prompt, like “/generate a motorcycle” or “/generate orange safety cone.” Within seconds, the API would generate a mesh version of these objects. They could then be fleshed out with texture, color, etc. With this API, you can model props or design your space much faster—no need to spend hours modeling simple objects. It lets you focus on the fun stuff, like designing the track layout and fine-tuning the car handling. This API saves hours on each object created and gives you back that time to experiment with new ideas without worrying about spending too much time or effort. Longer term, we plan to enable more complex and functional objects, even scenes.

3D objects generated with Cube

This technology extends to the tens of millions of creative people who play and connect on Roblox every day. We see a future where developers enable their users to become creators using AI. With the Mesh Generation API enabled, players can bring to life anything they can imagine. If a player wants a futuristic car, they can just type “red car of the future with side wings” or “black leather motorcycle jacket” and see it generated. This kind of in-game AI generation is going to unlock a whole new level of creativity. Players can personalize their experience in ways developers never imagined, and that’s going to make their games even more engaging.

Under the Hood: Cross Attention Between 3D and Text/Image Tokens

The key technical challenge was to connect text and images with 3D shapes. Our core technical breakthrough is 3D tokenization, which allows us to represent 3D objects as tokens in the same way that text can be represented as tokens. This gives us the ability to predict the next shape just as language models predict the next word in a sentence.

To achieve 3D generation, we designed a unified architecture for autoregressive generation of single objects, shape completion, and multiobject/scene layout generation. Autoregressive transformers are neural networks that use previous inputs to predict the next component. This architecture provides both scalability and multimodal compatibility so that as we expand the model, it will work with many different kinds of input (text, visual, audio, and 3D). We are open-sourcing this model. In this initial stage, creators will be able to generate 3D objects based on text prompts. Down the road, we intend for creators to be able to generate entire scenes based on multimodal inputs.
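This section's heading refers to cross attention between 3D and text tokens, but the post does not describe the mechanism in detail. As a rough illustration only, here is a minimal single-head scaled dot-product cross attention in plain Python, where shape-token vectors act as queries and text-token vectors act as keys and values. The function names and toy vectors are assumptions, and the learned projection matrices a real transformer would use are omitted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def cross_attention(queries, keys, values):
    """Each query (e.g. a shape token) takes a weighted average of the values
    (e.g. text tokens), weighted by query-key similarity."""
    dim = len(keys[0])
    outputs = []
    for query in queries:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in keys
        ]
        weights = softmax(scores)
        outputs.append([
            sum(weight * value[j] for weight, value in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


if __name__ == "__main__":
    shape_queries = [[1.0, 0.0], [0.0, 1.0]]   # toy shape-token vectors
    text_keys = [[1.0, 0.0], [0.0, 1.0]]       # toy text-token vectors
    text_values = [[1.0, 0.0], [0.0, 1.0]]
    print(cross_attention(shape_queries, text_keys, text_values))
```

Each output row is a convex combination of the text values, which is how information from the prompt can flow into every shape-token position.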

To train a generative pretrained transformer (GPT) for shape generation, we use discrete 3D shape tokens and align them with text prompts. This novel approach sets us up for playable 3D scene generation.
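One common way to obtain discrete tokens from continuous data is nearest-neighbor lookup in a codebook (vector quantization). The post does not say how Cube 3D produces its shape tokens, so the sketch below is an assumption for illustration: the codebook, the 2D "latent" vectors, and the `quantize` helper are all hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(vectors, codebook):
    """Replace each continuous vector with the index of its nearest codebook entry."""
    return [
        min(range(len(codebook)), key=lambda i: squared_distance(vector, codebook[i]))
        for vector in vectors
    ]


# Hypothetical 4-entry codebook and three continuous shape latents.
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
latents = [(0.1, -0.2), (0.9, 0.8), (0.2, 0.7)]
token_ids = quantize(latents, codebook)

# Pairing a prompt with the resulting discrete sequence gives one toy training example.
training_example = {"prompt": "orange safety cone", "shape_tokens": token_ids}

if __name__ == "__main__":
    print(training_example)
```

Once shapes are integer sequences like this, they can be fed to the same kind of next-token training used for text.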

Where Cube Is Heading

Today, much of the world uses AI for text, to predict words in a sentence. Many also use it for images, to predict pixels. This gets much more complex when creating scenes, where all of these elements come together and need to work in context with one another. For example, imagine an experience with a simple scene that can be described as “an avatar on a motorcycle in front of a racetrack with trees.”

Many elements go into building this experience. The trees are a combination of two 3D meshes, the motorcycle is a dense mesh with details and triangles, and the buildings are made up of Roblox parts. The avatar on the motorbike has more complex geometric features for its body, limbs, and head. Finally, we need a way to tie it all together with a layout. For that, we need bounding boxes, which outline an object to define its size and location, to know how to arrange this geometry. This is a painstaking process, but AI is capable of helping with each step. With AI, creators can get to the first version faster and have more time to test new ideas or refine their scene.
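To make the bounding-box idea concrete, here is a small sketch of a layout as a list of axis-aligned boxes, each with a center and a size, plus a check for which boxes intersect. The `BoundingBox` class, the coordinates, and the `find_overlaps` helper are hypothetical and not part of any Roblox API.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class BoundingBox:
    name: str
    center: Vec3  # (x, y, z) location of the box center
    size: Vec3    # (width, height, depth)

    def min_corner(self):
        return tuple(c - s / 2 for c, s in zip(self.center, self.size))

    def max_corner(self):
        return tuple(c + s / 2 for c, s in zip(self.center, self.size))

    def overlaps(self, other):
        """Two axis-aligned boxes intersect only if they overlap on every axis."""
        return all(
            a_lo < b_hi and b_lo < a_hi
            for a_lo, a_hi, b_lo, b_hi in zip(
                self.min_corner(), self.max_corner(),
                other.min_corner(), other.max_corner(),
            )
        )


def find_overlaps(layout):
    """Return the name pairs of all boxes in the layout that intersect."""
    return [(a.name, b.name) for a, b in combinations(layout, 2) if a.overlaps(b)]


# Toy layout for "an avatar on a motorcycle in front of a racetrack with trees".
layout = [
    BoundingBox("motorcycle", center=(0.0, 0.5, 0.0), size=(2.0, 1.0, 0.8)),
    BoundingBox("avatar", center=(0.0, 1.2, 0.0), size=(0.8, 1.6, 0.6)),
    BoundingBox("tree_left", center=(-5.0, 2.0, 3.0), size=(2.0, 4.0, 2.0)),
    BoundingBox("tree_right", center=(5.0, 2.0, 3.0), size=(2.0, 4.0, 2.0)),
]

if __name__ == "__main__":
    print(find_overlaps(layout))
```

Here the avatar and motorcycle boxes intersect on purpose, since the avatar is seated on the bike, while the trees stay clear of everything else. A layout step could use a check like this to tell intended contact from accidental collisions.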

When we get there, we want the 3D objects and scenes we create to be fully functional. We call this 4D creation, where the fourth dimension is interaction between objects, environments, and people. Achieving this requires the ability not only to build immersive 3D objects and scenes, but also to understand the contexts and relationships between those objects. This is where we are heading with Cube.

Beyond this first use case of mesh generation, we plan to extend to scene generation and understanding. We’ll be able to serve users the experiences they’re most interested in and to augment scenes by adding objects in context. For example, in an experience with a forest scene, a developer could ask Assistant to replace all the lush green leaves on the trees with fall foliage to indicate the change of season. Our AI Assistant tools react to requests from the developer, helping them rapidly create, adapt, and scale their experiences.

We’ll share updates and new functionality as we continue improving and expanding our foundation model. Until then, we hope you enjoy using and building on top of our open-source version of the Cube 3D model, which you can access on GitHub and HuggingFace.