Roblox’s Road to 4D Generative AI

- Roblox is building toward 4D generative AI, going beyond single 3D objects to dynamic interactions.
- Solving the challenge of 4D will require multimodal understanding across appearance, shape, physics, and scripts.
- Early tools that are foundational for our 4D system are already accelerating creation on the platform.

Roblox empowers creators to build immersive 3D experiences, avatars, and accessories by providing the tools, services, and support they need to bring their ideas to life. It’s these creators who build the vibrant content on our platform, which engages more than 77 million daily active users (as of Q1 2024). Through our free Roblox Studio app, we’ve released a suite of generative AI tools that are uniquely designed for Roblox workflows and trained on Roblox-specific content.

These tools make creation easier, more efficient, and more fun for experts and novices alike. Assistant enables 3D workspace editing, Animation Capture enables face and body motion, Code Assist helps with script editing and creation, Material Generator enables tiling material appearance, and Texture Generator enables asset-specific texture mapping. Each of these generative AI tools enhances one part of the 3D creative process.

Together, these tools augment a creator’s skillset and reduce time from concept to completion. We’ve built them using our own research breakthroughs as well as best-of-breed solutions from the larger AI ecosystem. They address the creation of individual assets in 1D (scripts), 2D (surfaces), and 3D (spaces). We have previewed some of the results from our 3D geometry generation and editing lab at various international research conferences, as well as at our own Roblox Developers Conference.

Across the industry, 1D and 2D are state of the art, and 3D is at the cutting edge of generative AI. Each is an increasingly significant challenge that continually drives exciting technical advances. Because we live in 3D space, it may seem that 3D is the ultimate generative AI challenge. However, based on the needs of our community, our vision for this work extends even further.

Where We Are Today

We are working toward 4D generative AI, where the fourth dimension is interaction. The power of Roblox’s online platform is interaction: between people, objects, and environments. Unlike traditional online video games, Roblox’s powerful runtime engine leverages a unique programming and simulation model focused on interaction. This model is inspired by the concept of a metaverse, where elements meet in complex, many-to-many, and spontaneous ways, rather than in prescribed and limited ways.

1D, 2D, and 3D generative AI tools produce individual assets. The challenge we face with 4D generative AI is in bringing those assets to life in ways that enable unrestricted interactions appropriate for our platform. This means, for example, that an avatar is not just shape and color—it’s also a skeleton, animations, and the ability to grasp tools and balance. That avatar can wear clothing that was not designed specifically for it and that automatically adjusts to fit perfectly and tracks all motion. Our new Avatar AutoSetup tool is an early example of how generative AI can help automate this type of creation. Developers can now complete this process in minutes rather than hours or days.

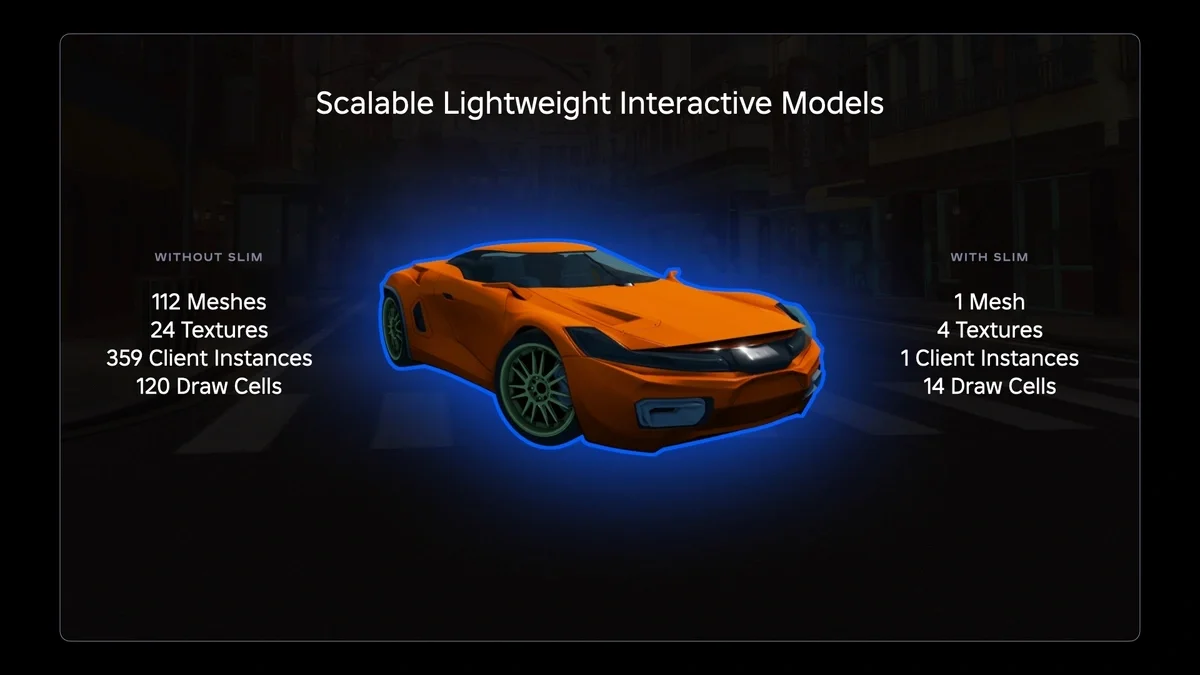

A sports car is not just a sleek shape and surface paint—it’s also the engine, movable parts, and physics rig that enable it to roar down virtual streets with precision and control. In each case, the object is extended from 3D to interact with all its parts through physics and with a user through their avatar.

Each of these richly interactive 4D elements can be added into a larger environment where the generative AI harmonizes the style of each element and injects interactive support between the objects and with the environment. Now a user, through their avatar, can drive in a street race with damage modifiers and high scores, and skid to a stop at a branded fashion store, where they shop for new clothing to celebrate their win.

Today, creating such experiences requires manual creation of the script source code, the workspace and data model structure, 3D geometry, animations, and materials. Our existing generative AI tools help with each portion of the pipeline. We are building a system that will connect all of these elements and generate them simultaneously. To achieve this, we must train our 4D generative AI system in a multimodal manner, meaning across multiple types of data together. This is already done for images and text, which power Material Generator. Enabling interaction and adding purpose-built optimizers for physics is how we’ll reach the next level of 4D capability.

In just the past year, we’ve seen enormous changes in how content is created on Roblox. Looking ahead, we see a future where anyone, anywhere, can bring an idea to life by simply typing or speaking a command. To get there, we need to begin to solve some of the challenges we’ll meet on the road.

The Challenges Ahead of Us

The experiments we shared above will be available in the near future. Further out, we face three clear challenges we’ll need to overcome:

1. Functional: The objects created by this future generative AI tool need to be functional. Given the 3D shape of a truck or a plane, the system should not treat it as a sealed, opaque object. Instead, without the creator having to intervene, it should automatically recognize which parts need to have joints and where the mesh needs to open.

This is a human-level AI problem these systems need to solve—to look for correct wheel placement, for example, and then to add an axle for the wheels so that they operate in the same way they would in the physical world. And to look for where the door is and then cut an opening and add hinges so the door can open and close.

2. Interactive: Items created with this future generative AI need not only to function independently but also to interact with other objects in the environment. Now that the system has created a car with a door that opens and wheels that turn, it needs to understand the physics of the world the car is placed into. How does the vehicle move on the terrain? If it crashes into a boulder, where and how does it crumple, based on the size of the boulder and the speed of the vehicle?

This complex challenge requires both the object created and the environment or objects it interacts with to understand each other’s physics. Luckily, Roblox has a leg up in this aspect, as the platform was built on a physics engine, which means that all objects in experiences can be physical. When generative AI creates a 4D object, physical qualities such as material, mass, and strength will also be added to prepare it to interact with other physically based objects in the world.

3. Controllable: Today, we interact with generative AI using prompts. This is an imperfect science, akin to a scavenger hunt. Someone asking for an image of a bunny could receive a huge variety of results: a real rabbit, a chocolate Easter bunny, a cartoon bunny, a painting of a rabbit, or an illustration of a rabbit wearing a coat. So we refine the prompts, asking for photorealistic images or images “in the style of” as we dial in the vision that’s in our heads. This takes time and repeated attempts to get closer to what we’re looking for.

Imagine trying to follow this process for a 3D object that functions and interacts with other objects, such as the truck in our example above. Prompt engineering at this level would be exponentially complex—not something that anyone could easily use. To bring a creator’s idea to life, we need a faster, easier way to communicate and refine, essentially collaborating with an AI assistant that’s more of a partner and less of a scavenger hunt.

This is an industry-wide challenge, and many companies are working to bring greater controllability into generative AI. The field has made some progress here with tools such as ControlNet, which increases control by allowing the creator to provide additional input conditions beyond just text prompts. We’re currently exploring other methods that show promise for a satisfying workflow, such as having the AI pause after critical steps to await user input. But we have a long way to go to achieve a seamless experience.
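To make the functional and interactive challenges more concrete, here is a minimal Python sketch of one way a generated object could be represented once it is no longer a sealed mesh: a set of parts connected by typed joints (an axle for each wheel, a hinge for the door), where each part carries a material from which its mass is derived. Everything here, including `Assembly`, `Part`, `JointType`, and the density table, is a hypothetical illustration assumed for this example, not a Roblox API or data format.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class JointType(Enum):
    FIXED = "fixed"  # rigidly welded to its parent part
    AXLE = "axle"    # spins freely about one axis, e.g. a wheel
    HINGE = "hinge"  # swings about one axis, e.g. a door


# Hypothetical, illustrative material densities in kg/m^3.
DENSITY_KG_PER_M3 = {"steel": 7850.0, "rubber": 1100.0}


@dataclass
class Part:
    name: str
    material: str
    volume_m3: float

    @property
    def mass_kg(self) -> float:
        # Mass is derived from the material's density and the part's volume.
        return DENSITY_KG_PER_M3[self.material] * self.volume_m3


@dataclass
class Joint:
    parent: str
    child: str
    kind: JointType


@dataclass
class Assembly:
    """A generated object as parts plus the joints that connect them."""
    parts: dict[str, Part] = field(default_factory=dict)
    joints: list[Joint] = field(default_factory=list)

    def add(self, part: Part, parent: str | None = None,
            kind: JointType = JointType.FIXED) -> None:
        if parent is not None and parent not in self.parts:
            raise KeyError(f"unknown parent part: {parent}")
        self.parts[part.name] = part
        if parent is not None:
            self.joints.append(Joint(parent, part.name, kind))

    def total_mass_kg(self) -> float:
        return sum(p.mass_kg for p in self.parts.values())

    def movable_parts(self) -> list[str]:
        # Parts that move relative to their parent and need simulation.
        return [j.child for j in self.joints if j.kind is not JointType.FIXED]


# Hypothetical usage: a car body with two axled wheels and a hinged door.
car = Assembly()
car.add(Part("body", "steel", 0.2))
for wheel in ("wheel_fl", "wheel_fr"):
    car.add(Part(wheel, "rubber", 0.01), parent="body", kind=JointType.AXLE)
car.add(Part("door_l", "steel", 0.01), parent="body", kind=JointType.HINGE)

print(car.movable_parts())  # ['wheel_fl', 'wheel_fr', 'door_l']
print(round(car.total_mass_kg(), 1))  # 1670.5
```

A real system would also need collision geometry, joint limits, and forces. The point of the sketch is only that once parts and joints are explicit, a physics engine knows which pieces can move, and mass follows from material rather than being entered by hand.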
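One controllability approach mentioned above, having the AI pause after critical steps to await user input, can be sketched as a generation loop with checkpoints. In this minimal Python sketch, the draft is assumed to be a plain dictionary, and the step names `shape`, `paint`, and `rig`, as well as the `creator` reviewer callback, are hypothetical. The creator's correction after the shape step is merged into the draft before later steps build on it, instead of the creator rewriting the whole prompt and starting over.

```python
from __future__ import annotations

from typing import Callable

# A generation step takes the current draft and returns an updated draft.
Step = Callable[[dict], dict]
# A reviewer sees the draft after a critical step and returns edits to apply;
# an empty dict means "approved as is".
Reviewer = Callable[[str, dict], dict]


def run_with_checkpoints(steps: list[tuple[str, Step, bool]],
                         review: Reviewer) -> dict:
    draft: dict = {}
    for name, step, critical in steps:
        draft = step(draft)
        if critical:
            # Pause and hand control back to the creator before continuing.
            draft.update(review(name, dict(draft)))
    return draft


# Hypothetical pipeline: the "rig" step builds on whatever body survived review.
steps = [
    ("shape", lambda d: {**d, "body": "sedan"}, True),
    ("paint", lambda d: {**d, "color": "red"}, False),
    ("rig", lambda d: {**d, "wheels": 4, "style": d["body"]}, True),
]

reviewed: list[str] = []


def creator(step_name: str, draft: dict) -> dict:
    reviewed.append(step_name)
    # Hypothetical feedback: the creator wanted a truck, not a sedan.
    return {"body": "truck"} if step_name == "shape" else {}


result = run_with_checkpoints(steps, creator)
print(reviewed)  # ['shape', 'rig']
print(result)    # {'body': 'truck', 'color': 'red', 'wheels': 4, 'style': 'truck'}
```

Only steps marked as critical trigger a pause, so the creator is consulted at a few decisive points rather than after every operation, and an early correction propagates automatically into everything generated afterward.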

We’re excited about the impact we’ve seen so far and even more excited about what’s ahead. Creators using the Material Generator beta have increased their use of physically based rendering (PBR) material variations by more than 100 percent compared with creators who aren’t using it, growing from just over a thousand in March 2023 to over two thousand in June 2024. As of June 2, 2024, creators have adopted approximately 535 million characters of code suggested by Code Assist.

As we begin to solve the challenges on this road to 4D, our creators will be able to create more, faster. We also expect to see a greater diversity of experiences on Roblox as we make it possible for more people to become creators. What they build and how they build it will show us where to invest in new tools and AI algorithms to empower these new creators, alongside our existing community.

With 4D generative AI, Roblox has opened up a new frontier for experience and asset creation. While the challenges are new, our process for innovation is well honed. We combine our top-notch internal research and development teams, university collaborations, and rapid iteration on prototypes in partnership with our community.